There’s a peculiar kind of silence that fills the gap between hearing music in your head and bringing it into the world. For decades, this silence has been the reality for millions of creative individuals who possessed musical ideas but lacked the technical toolkit to express them. The traditional path to music production—conservatory training, expensive equipment, years of practice—has functioned as an unintentional filter, determining whose musical visions get heard and whose remain locked away.

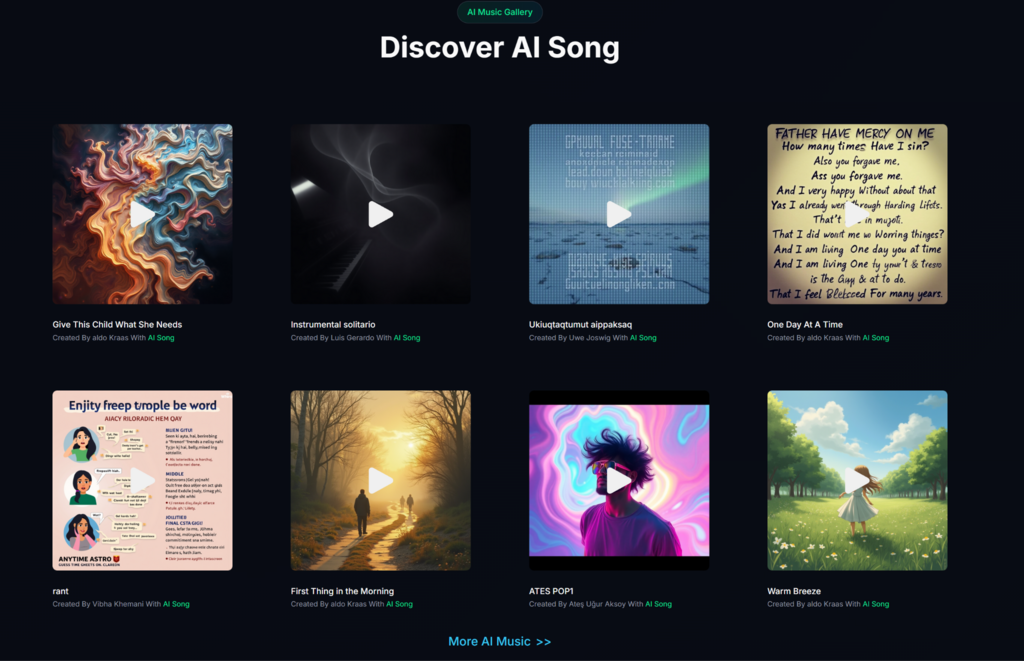

The emergence of AI-powered music generation tools, including platforms like the AI Song Generator, represents more than a technological advance. It signals a fundamental restructuring of who gets to participate in music creation. This shift raises fascinating questions about creativity, accessibility, and the evolving relationship between human imagination and machine capability.

The Hidden Cost of Traditional Music Production

Beyond Financial Investment: The Time Barrier

When discussing barriers to music creation, conversations typically focus on financial costs—software licenses, hardware investments, studio time. But there’s an equally significant barrier that receives less attention: time.

Learning to produce music competently requires a large investment of time. Becoming fluent in a Digital Audio Workstation can take months of dedicated practice. Understanding music theory well enough to compose coherent melodies often takes years of study. Training the ear well enough to mix and master audio is a gradual process that can't be rushed.

For working professionals, parents, students, or anyone juggling multiple responsibilities, this time requirement can be prohibitive. An aspiring creator might have thirty minutes here and an hour there, fragments of time too short to make steady progress up the steep learning curve of traditional production.

The Expertise Paradox

There’s a paradox at the heart of traditional music production: expressing a musical idea requires extensive technical knowledge, but acquiring that knowledge can dull the spontaneous creativity that sparked the idea in the first place. By the time someone has learned gain staging, compression ratios, and EQ curves, the raw emotional impulse that made them want to create music may be buried under layers of technical thinking.

AI music generation largely sidesteps this paradox. The emotional impulse can be turned into sound without first passing through years of technical training.

Understanding the Technology Without the Hype

What AI Music Generation Actually Does

Stripped of marketing language, AI music generators are sophisticated pattern-matching systems. They are trained on large collections of existing music and learn the relationships between musical elements: how certain chord progressions evoke specific emotions, which instrumental combinations define particular genres, and which rhythmic patterns create different energy levels.

When a user inputs a text description, the system identifies keywords and contextual clues, then generates music incorporating patterns associated with those elements. It’s not “understanding” music in any human sense, but rather applying statistical likelihood to musical decision-making.

This doesn’t make the output worthless, but it does mean AI-generated music tends to sound familiar rather than revolutionary. The system excels at producing music that fits comfortably within established genres and conventions.
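To make "applying statistical likelihood to musical decision-making" concrete, here is a deliberately tiny illustration: a first-order Markov chain over chord names. This is not how the AI Song Generator or any commercial platform works (production systems typically rely on large neural networks trained on far more data); the corpus and the function names `learn_transitions`, `most_likely_next`, and `generate` are hypothetical, chosen only to show the core idea that such a model recombines transitions it has already seen.

```python
import random
from collections import Counter, defaultdict

# Toy illustration only: a first-order Markov chain over chord names.
# The corpus and function names are hypothetical; real AI music
# generators use much larger neural models, not this exact technique.

def learn_transitions(progressions):
    """Count how often each chord is followed by each other chord."""
    counts = defaultdict(Counter)
    for prog in progressions:
        for current, nxt in zip(prog, prog[1:]):
            counts[current][nxt] += 1
    return counts


def most_likely_next(counts, chord):
    """Return the chord that most often followed `chord`, or None if unseen."""
    options = counts.get(chord)
    if not options:
        return None
    return options.most_common(1)[0][0]


def generate(counts, start, length, rng):
    """Sample a progression, weighting each step by how often it was observed."""
    prog = [start]
    while len(prog) < length:
        options = counts.get(prog[-1])
        if not options:  # dead end: this chord never had a successor
            break
        chords = list(options)
        weights = [options[c] for c in chords]
        prog.append(rng.choices(chords, weights=weights, k=1)[0])
    return prog


# Hypothetical "training data": four common pop progressions.
corpus = [
    ["C", "G", "Am", "F"],
    ["C", "G", "Am", "F"],
    ["C", "Am", "F", "G"],
    ["Am", "F", "C", "G"],
]

counts = learn_transitions(corpus)
print(most_likely_next(counts, "C"))  # G (seen 3 times vs. Am once)
print(generate(counts, "C", 8, random.Random(42)))
```

Every step in a sampled progression is a transition that already occurs in the training corpus, which is a small-scale version of why this kind of output tends to sound familiar rather than revolutionary.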

Practical Testing Across Different Use Cases

Podcast Production and Audio Branding

The podcasting landscape has exploded in recent years, with millions of shows competing for listener attention. Audio branding—distinctive intro music, transition sounds, outro themes—helps shows stand out and build recognition.

Traditionally, podcasters faced limited options: use royalty-free stock music that dozens of other shows also use, pay for custom composition (often $500 to $2,000 for a single track), or attempt DIY production with limited skills.

In testing, AI generation proved genuinely useful for podcast music. For a true crime podcast, the prompt “mysterious, suspenseful music with subtle tension” produced several usable options within minutes. The tracks weren’t groundbreaking, but they were unique, professional-sounding, and perfectly adequate for the intended purpose.

The main limitation is consistency. Maintaining a consistent sound across multiple episodes requires careful prompt management: generating music for episode one and then asking for something that “sounds similar” for episode two often produces noticeably different results.

Educational Content and Learning Enhancement

Teachers and educational content creators face unique challenges. They need music that enhances learning without distracting, matches specific lesson moods, and doesn’t carry licensing restrictions that complicate classroom use.

A history teacher creating a video about ancient civilizations might need music that evokes historical periods. An AI prompt like “create ambient music with ancient-sounding instruments, mysterious and contemplative” can generate background audio that serves the educational purpose effectively.

Testing shows this works particularly well for instrumental background music. The moment lyrics or prominent melodies are needed, limitations become more apparent.

Platform Comparison: Features That Actually Matter

After extensive testing across multiple AI music platforms, certain features emerge as genuinely important while others prove to be marketing fluff.

| Feature | Why It Matters | AI Song Maker | Typical Competitors |
| --- | --- | --- | --- |
| Prompt Flexibility | Determines how naturally ideas can be described | Accepts conversational descriptions effectively | Often require specific terminology or structure |
| Generation Speed | Affects iteration workflow and experimentation | 30-90 seconds allows rapid testing | Slower speeds discourage experimentation |
| Output Consistency | Important for projects needing multiple related tracks | Moderate; requires careful prompting | Varies widely across platforms |
| Licensing Clarity | Critical for commercial or public use | Clearly stated royalty-free terms | Often buried in complex terms of service |
| Format Options | Affects post-production flexibility | Standard audio formats available | Some limit format choices on free tiers |

The Interface Philosophy Divide

Platforms fall into two distinct categories: those designed for people with music production knowledge, and those designed for complete beginners. The former offer more granular control but require understanding what those controls mean. The latter accept natural language descriptions but offer less precision.

Neither approach is inherently superior. The right choice depends on the user’s background and needs. Someone with music theory knowledge might find simplified interfaces frustrating, while a complete beginner might be overwhelmed by technical options.

The Honest Limitations

Genre Authenticity Varies Wildly

Testing across multiple genres reveals significant quality variation. AI systems handle certain styles—electronic music, ambient soundscapes, generic pop structures—quite well. Other genres, particularly those with strong cultural specificity or complex improvisational elements, produce less convincing results.

Generating “authentic New Orleans jazz” or “traditional Irish folk music” often yields something that hits surface-level markers but lacks the nuanced characteristics that define those genres. The AI recognizes patterns but doesn’t understand cultural context or historical evolution.

Emotional Depth Remains Elusive

AI-generated music can be pleasant, energetic, calm, or tense. What it struggles to achieve is genuine emotional depth—the quality that makes certain songs resonate personally, that creates moments of unexpected beauty or profound sadness.

This limitation isn’t necessarily a problem for functional music—background tracks for videos, ambient music for spaces, placeholder audio for projects. But it means AI generation isn’t replacing the emotional impact of carefully crafted human composition.

The Regeneration Requirement

Promotional materials often showcase perfect examples, but real-world use involves significant trial and error. Generating five to ten variations before finding something truly usable is common. This isn’t a fatal flaw: generation is fast enough that creating ten variations takes less time than browsing stock music libraries. But the process involves curation and selection, not a single description that immediately yields a finished track.

Emerging Use Cases

Rapid Prototyping in Professional Contexts

Even professional composers are finding applications. When scoring a film or commercial, the early creative conversations often involve describing musical directions verbally. AI generation allows translating those descriptions into actual audio, facilitating clearer communication between composers, directors, and clients.

The AI-generated track isn’t the final product—it’s a communication tool that helps align creative vision before investing time in professional production.

Therapeutic and Wellness Applications

Mental health professionals and wellness practitioners are exploring AI music generation for creating personalized soundscapes. A meditation instructor might generate custom tracks matching specific session lengths and energy progressions. A therapist might create calming background music tailored to individual client preferences.

The advantage here is customization at scale. Rather than choosing from existing meditation music libraries, practitioners can generate tracks tailored to each situation.

The Cultural Conversation

Redefining Musical Literacy

Historically, musical literacy meant reading notation, understanding theory, and performing instruments. AI generation suggests an expanded definition: understanding how to describe musical ideas effectively, recognizing quality in generated outputs, and knowing how to integrate AI-created elements into larger creative projects.

This shift parallels other technological changes. Photography didn’t eliminate the value of visual literacy—it changed what visual literacy means. Similarly, AI music generation doesn’t eliminate musical knowledge—it transforms which aspects of musical knowledge matter most.

The Professional Musician Perspective

Conversations with professional musicians reveal complex attitudes. Initial resistance often softens upon experimentation, but concerns about market impact remain valid. Entry-level composition work faces genuine disruption. However, work requiring deep expertise, cultural authenticity, or emotional sophistication remains firmly in human territory.

The likely outcome resembles other technological disruptions: market segmentation rather than wholesale replacement. AI serves certain needs while human expertise serves others, with a hybrid zone where both contribute.

The Practical Reality

For someone considering whether AI music generation has a place in their creative process, the answer depends entirely on context and goals. These tools excel at making functional music accessible—background tracks, placeholder audio, rapid concept exploration, and projects where “good enough” truly is good enough.

They struggle with applications requiring emotional depth, cultural authenticity, or the kind of artistic risk-taking that defines memorable music. Understanding these boundaries helps set appropriate expectations and identify where AI generation adds genuine value versus where it falls short.

The technology doesn’t replace musical creativity—it redistributes it, making certain aspects more accessible while highlighting the irreplaceable value of others. That redistribution creates both opportunities and challenges, and navigating them thoughtfully will define how AI music generation integrates into our creative future. The melody that once existed only in someone’s imagination can now find its way into the world, and that expansion of creative possibility represents progress worth exploring.