List Crawling Overview

In the modern digital world, data collection plays a vital role in decision-making, marketing, and analytics. Businesses rely heavily on large volumes of structured information to gain insights and identify patterns. The process of automatically gathering data from websites and online directories has become a cornerstone of digital research and automation. With the help of advanced tools and technologies, organizations can extract, filter, and organize information efficiently, reducing manual effort while improving accuracy and speed.

Understanding the Concept of Data Extraction

Data extraction refers to the automated process of collecting relevant information from web sources. This method is widely used in various industries, including e-commerce, real estate, finance, and market research. Instead of manually browsing through pages, automated systems collect data like names, email addresses, product prices, or contact details and store them in a structured format such as spreadsheets or databases. The process ensures efficiency and allows professionals to focus more on analysis rather than collection.
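As a rough illustration of turning page markup into a structured format, here is a minimal sketch using only Python's standard library. The HTML fragment, the `name` and `price` class names, and the helper functions `extract_products` and `to_csv` are all assumptions made for the example; real pages use different markup and usually need more defensive parsing.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment; real pages will use different markup.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></li>
  <li class="product"><span class="name">Notebook</span><span class="price">$3.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text inside <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished records
        self._field = None   # field currently being read, if any
        self._current = {}   # record under construction

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that appears inside one of the tracked spans.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # One <li> corresponds to one record.
            self.rows.append(self._current)
            self._current = {}


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows):
    """Serialize records as CSV text, ready to open in a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract_products(SAMPLE_HTML)
csv_text = to_csv(rows)
```

Here `rows` holds one dictionary per product and `csv_text` holds the same records as CSV. Writing `csv_text` to a file gives the spreadsheet-style output described above.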


Importance of Automated Data Collection

Automation has transformed how businesses operate. Through web scraping, companies can monitor competitors, identify new trends, and gather insights into customer behavior. Crawls that run on a schedule or continuously keep the collected data current, which is essential for fast-paced industries where information changes rapidly. Machine learning can also help clean and classify the collected data, supporting more informed decisions. As a result, automated extraction has become a common part of digital transformation strategies.

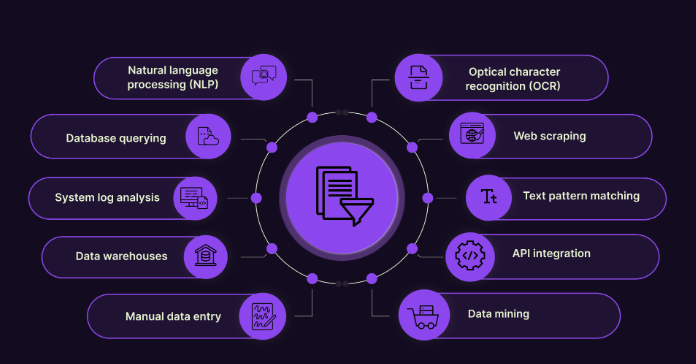

Common Applications of Data Extraction

The applications of automated data gathering are diverse and extend across multiple sectors. In marketing, professionals use these methods to build lead databases and identify potential clients. In e-commerce, they help track product listings, prices, and customer reviews. Researchers use data extraction for academic or technical analysis, while financial institutions rely on it for monitoring prices and economic indicators. The ability to process vast amounts of data quickly makes it a valuable resource for any data-driven organization.

Key Components and Tools Used

Modern extraction systems consist of several key components that work together. These include crawlers, which browse web pages automatically; parsers, which identify and extract specific elements; and data storage modules, which organize and save the collected information. Popular tools cover different skill levels: Scrapy and BeautifulSoup are Python libraries that require some programming, while Octoparse offers a visual interface for designing and managing extraction jobs without writing code. Many advanced solutions also offer cloud-based integration, which helps with accessibility and scalability for enterprises.
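The three components can be sketched in a few dozen lines of standard-library Python. This is only an illustration under stated assumptions: the `PAGES` dictionary is a hypothetical in-memory site that stands in for real HTTP responses, and `fetch`, `crawl`, `save`, and `PageParser` are names invented for the example, not part of any of the tools mentioned above.

```python
import sqlite3
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "site" standing in for real HTTP responses.
PAGES = {
    "/": '<title>Home</title><a href="/a">A</a><a href="/b">B</a>',
    "/a": '<title>Page A</title><a href="/b">B</a>',
    "/b": '<title>Page B</title><a href="/">Home</a>',
}


class PageParser(HTMLParser):
    """Parser: pulls the <title> text and every href value from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        if self._in_title:
            self.title += data

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False


def fetch(url):
    """Stand-in for an HTTP request; returns None for unknown URLs."""
    return PAGES.get(url)


def crawl(start, store):
    """Crawler: breadth-first traversal that visits each URL once."""
    seen = {start}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        parser = PageParser()
        parser.feed(html)
        parser.close()
        store(url, parser.title)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)


# Storage: an in-memory SQLite table keyed by URL.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE pages (url TEXT PRIMARY KEY, title TEXT)")


def save(url, title):
    db.execute("INSERT INTO pages VALUES (?, ?)", (url, title))


crawl("/", save)
stored = db.execute("SELECT url, title FROM pages ORDER BY url").fetchall()
```

Keeping the storage step behind a plain callback (`store`) means the crawler does not need to know whether records end up in SQLite, a CSV file, or a cloud database. In a real system, `fetch` would issue HTTP requests and add rate limiting and error handling.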

Benefits of Using Automated Extraction Systems

Automated data collection systems offer several advantages. They remove repetitive manual work and apply the same extraction rules to every page, which reduces human error and produces more consistent datasets. They lower costs and improve decision-making by providing access to up-to-date information. They also scale: the same pipeline that handles a few hundred records can, with enough infrastructure, handle millions. This efficiency and adaptability make them valuable in modern business environments.

Ethical and Legal Considerations

While automated data extraction offers substantial benefits, it's essential to use these systems responsibly. Organizations must respect data privacy laws such as the GDPR, which also applies to personal data that is publicly available, and should limit collection to information they are permitted to use. Ethical scraping practices involve obtaining data transparently and avoiding misuse of private or copyrighted material. Companies that prioritize compliance not only protect their reputation but also foster trust among clients and stakeholders. Responsible use of technology keeps innovation within legal and ethical boundaries.
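One common convention for transparent crawling, not mentioned above but widely followed, is to check a site's `robots.txt` rules before fetching a page. The sketch below uses Python's standard `urllib.robotparser` module. The rules text, the `example-bot` user agent, the `example.com` URLs, and the `build_rules` helper are assumptions for illustration. Passing this check does not by itself establish that collection is lawful.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download this
# from the target site instead of hard-coding it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_text):
    """Parse robots.txt text into a rule set that can be queried per URL."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


rules = build_rules(ROBOTS_TXT)

# Ask whether our (hypothetical) user agent may fetch each URL.
allowed_public = rules.can_fetch("example-bot", "https://example.com/products")
allowed_private = rules.can_fetch("example-bot", "https://example.com/private/users")

# Seconds the site asks crawlers to wait between requests, if specified.
delay = rules.crawl_delay("example-bot")
```

A crawler built this way would skip any URL for which `can_fetch` returns `False` and pause for `delay` seconds between requests.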

The Role of AI in Data Collection

Artificial intelligence has significantly improved the accuracy and speed of data extraction processes. AI-powered systems can identify relevant information more efficiently by learning from previous extractions. Through natural language processing and pattern recognition, they can even interpret unstructured data like articles or social media posts. AI also enables real-time updates and predictive analytics, making data more actionable. The combination of automation and intelligence ensures that organizations stay ahead in an increasingly competitive, data-centric world.

Conclusion

Automated data extraction has become an essential element of the digital economy. By combining technology, efficiency, and intelligence, it allows organizations to transform raw information into actionable insights. When used ethically and strategically, data extraction tools empower professionals to enhance productivity, refine marketing strategies, and make informed business decisions. As technology continues to evolve, intelligent automation will remain a driving force behind innovation and data-driven success.

FAQs

1. What is automated data extraction used for?

It is used to collect structured data from websites and digital sources for analysis, marketing, and research.

2. Are these systems suitable for all industries?

Yes, they are widely used across sectors such as finance, e-commerce, marketing, and academia.

3. Do I need coding skills to use extraction tools?

Not necessarily. Many tools provide user-friendly interfaces that require little to no programming knowledge.

4. Is automated data collection legal?

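It depends on the jurisdiction, the type of data, and how the data is used. Collecting publicly available information while complying with privacy laws such as the GDPR and avoiding private or copyrighted material keeps you on safer ground.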

5. How does AI improve data extraction?

AI enhances accuracy and speed by automating pattern recognition, filtering, and predictive data processing.